Sometimes you may have very long text files in which it is hard to spot duplicated patterns, lines, or words. Or maybe you have a bunch of small text files you want to check more easily, or you even want to pipe the output of a command through a filter. If so, uniq is the command you are looking for.

With uniq you can find redundant information very simply, and it can also remove those duplicates if you need to. In this tutorial you will see some examples of the command that you may find useful. It is part of GNU coreutils and is installed by default in the vast majority of distros, so you will not need to install any package.

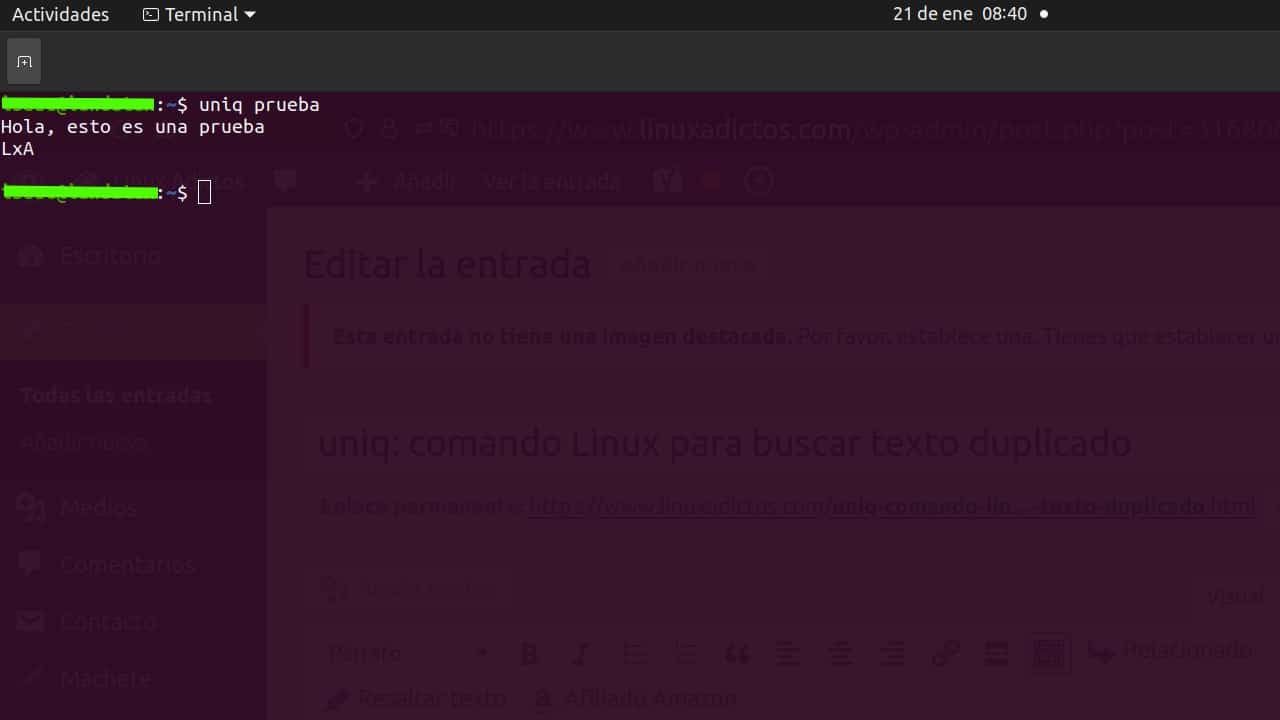

First of all, let's look at an example to understand the basics of what the uniq command does and what it doesn't do. Imagine that you create a text file called prueba.txt and put several repeated phrases or words inside it, for example the line «Hi, this is a test» three times in a row, and then run uniq on it:

nano prueba.txt
uniq prueba.txt

In that case, the output of the command will simply be:

Output: Hi, this is a test

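That is, it prints a single «Hi, this is a test» line and drops the other two identical copies. Note that uniq only collapses duplicates that are adjacent, which is why this works here: the three lines are consecutive. But beware: if you use cat to view the original file, you will see that nothing has been removed from it. uniq only removed the duplicates from its output: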

cat prueba.txt

whose output would be:

Hi, this is a test
Hi, this is a test
Hi, this is a test
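A minimal, non-interactive version of the example above, assuming a POSIX shell: it writes prueba.txt with printf instead of nano, runs uniq on it, and then shows that the file on disk still contains all three lines. The sample line is only for illustration.

```shell
# Write the same line three times in a row (instead of typing it in nano)
printf 'Hi, this is a test\nHi, this is a test\nHi, this is a test\n' > prueba.txt

# uniq prints one line for each run of identical adjacent lines
uniq prueba.txt

# The file itself is untouched: cat still shows all three lines
cat prueba.txt
```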

But the uniq command has many more options. For example, it can tell you how many times each line occurs, printing the count at the beginning of the line. To do so:

uniq -c prueba.txt
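A short sketch of -c with made-up sample data: three identical lines followed by one different line. The count is right-aligned with leading spaces, and the exact padding width can differ between uniq implementations, so don't rely on it if you parse the output.

```shell
# Sample file: one line repeated three times, then a different line
printf 'Hi, this is a test\nHi, this is a test\nHi, this is a test\nBye\n' > prueba.txt

# -c prefixes each output line with how many times it occurred in a row
uniq -c prueba.txt

# Strip the leading padding if you want to process the counts further
uniq -c prueba.txt | sed 's/^ *//'
```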

You could also print only the repeated lines and ignore the non-repeated ones, with the -d option:

uniq -d prueba.txt
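To see -d in action, here is a sketch with a hypothetical file mixing repeated and non-repeated lines. -d prints one copy of each line that appears more than once in a row.

```shell
# apple and cherry are repeated; banana appears only once
printf 'apple\napple\nbanana\ncherry\ncherry\n' > prueba.txt

# Prints one copy of each repeated line: apple, then cherry
uniq -d prueba.txt
```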

Or print only the lines that are not repeated, with the -u option:

uniq -u prueba.txt
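Using the same kind of made-up sample file, -u does the opposite of -d and keeps only the lines that are not repeated.

```shell
# apple and cherry are repeated; banana appears only once
printf 'apple\napple\nbanana\ncherry\ncherry\n' > prueba.txt

# Prints only the line that is not repeated: banana
uniq -u prueba.txt
```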

To ignore differences in case when comparing lines, so that «Hello» and «hello» count as the same line, use the -i option:

uniq -i prueba.txt
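A small sketch comparing plain uniq with uniq -i, assuming the GNU coreutils uniq shipped by most Linux distributions (-i is not part of the POSIX uniq options). The sample words are only for illustration; with -i, the first line of each group is the one printed.

```shell
# Three spellings of the same word, then a different word
printf 'Hello\nhello\nHELLO\nworld\n' > prueba.txt

# Case-sensitive (default): all four lines are printed
uniq prueba.txt

# Case-insensitive: prints Hello (first of its group) and world
uniq -i prueba.txt
```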

And how can you create a file that contains each line only once, with all the duplicates removed? It is as simple as redirecting the output of uniq to a new text file:

uniq prueba.txt > unicas.txt
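Because uniq only compares adjacent lines, the redirection above leaves duplicates in place when they are scattered through the file. A common approach, sketched here with hypothetical sample data, is to sort the file first so identical lines end up next to each other, and then pipe the result into uniq.

```shell
# "apple" appears twice, but the copies are not adjacent
printf 'apple\nbanana\napple\n' > prueba.txt

# uniq alone keeps both copies: apple, banana, apple
uniq prueba.txt

# Sorting groups identical lines together, so uniq can collapse them
sort prueba.txt | uniq > unicas.txt

# unicas.txt now holds apple and banana, once each
cat unicas.txt
```

Note that sorting changes the order of the lines. `sort -u prueba.txt > unicas.txt` produces the same result in a single command.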